IT School

Learn to program in

C

C++

Java

You will create your own unique IT projects in the three most popular programming languages under the guidance of IT practitioners.

C/C++

cout << "Hello, world!" << endl;

Java

System.out.println("Hello Java");

C#

Console.WriteLine("Hello World!");

Recognize your strengths and areas for improvement. Don't be afraid to contact us with any questions!

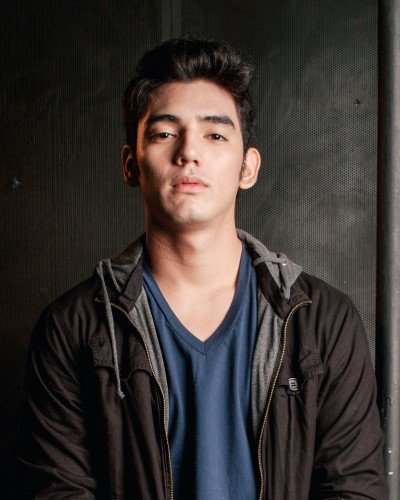

Charles, CO-FOUNDER

Our Advantages

IT PROGRAMMING SCHOOL

Convenient location

We are located near the subway, so you will not have to spend time on the road.

Quality and affordability

We are constantly improving our course programs and reviewing our prices.

Small groups

Our groups have 2 to 3 people, which allows us to pay attention to everyone.

Cheaper with a friend

If you bring a friend, each of you gets a 50% discount on tuition.

Employment

Graduates have the opportunity to find employment in leading IT companies.

Working weekends

We hold classes on weekends so that you can combine training and work.

Practical Teachers

Our teachers are practitioners with extensive industry experience.

Corporate Training

We know how to make your employees more efficient.

C# programming on .NET

Get everything you need to start a career in .NET development as a Junior Software Engineer.

Gain skills in C# development.

Create your own application using the .NET platform.

Latest News

Show our blog

How to Build a Fintech Mobile App: Trends, Features and Costs – fintech mobile app development

In 2021, the fintech industry experienced a significant surge, due…

Answers to the Most Common Questions We Get Regarding Spam

These days, phone call and text spam has become an…

Pricing

Choose your plan

Our Team

You couldn't be in better hands

Francisco Colmenero

CEO & Developer

Contact Us

3005 Hiddenview Drive

Plymouth Meeting, PA

P: +1 215-962-2757

1052 Duncan Avenue

Whitestone, NY

P: +1 917-579-9459

What Students Say

Very best school

Partnerships We Are Proud To Be a Part Of

The Image Size Checker by Sitechecker is a free tool that gives you the possibility to find out the image size and issues with it – not just for the specific URL, but also for your full site. We also offer how-to-fix instructions for this kind of issue.

Outsource web development to PSD2HTML – a white-label development agency with more than 15 years of experience in serving web coding needs for small and medium digital and marketing agencies around the world.

Call tracking services help businesses measure their advertising effectiveness. They use dedicated tracking phone numbers to differentiate incoming calls to determine which campaigns are generating the most qualified leads. Data from these numbers allows businesses to measure the ROI of their campaigns and optimize future spending for maximum impact.

Navigate through this test automation guide for practical insights on steering clear of common pitfalls.